Boosting is a machine learning technique used for regression and classification problems. Boosting algorithms overcome the drawbacks of basic and simple learning algorithms by combining many of them into one stronger model. In this article, I’m going to talk about 8 different types of boosting algorithms; the implementation of each one will be covered in future posts.

You might be wondering: "What is a boosting algorithm?"

Well, my friend, you’ve come to the right place.

A boosting algorithm is a machine learning method that can be used to improve the performance of other machine learning algorithms.

Because a boosted model is built from many simple models that work together, boosting algorithms are often well suited for problems with lots of features and/or large datasets.

With roots in statistics, computer science, and even psychology, machine learning is one of the most promising fields in artificial intelligence. It’s a way for machines to learn without being explicitly programmed, and it can be applied to huge swathes of real-world situations.

Collections of machine learning algorithms make up the vast majority of AI technology out there, including spam filters, Google search, and facial recognition systems.

Of all the machine learning algorithms available, boosting is one of the most popular and effective. It works by iteratively improving a model: each new weak learner focuses on the examples that the previous ones got wrong.

Boosting is used in everything from recommendation engines to fraud detection, but it can be tricky—a lot goes into not just creating a model but also making sure it’s best suited for your needs.

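Gradient boosting algorithms are used for improving the accuracy of weak base models such as shallow decision trees and linear classifiers. The need for boosting a particular classifier arises when a single simple model underfits, that is, when it is too weak to capture the patterns in the data. Keep in mind that when the available training set is not very clean, meaning there is a lot of error and noise in it, classic boosting can end up fitting the noisy examples, which could affect the performance of the model.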

The algorithm is used to develop a model in which the predictions are more accurate than the base model. The prediction accuracy is increased by combining different classifiers.

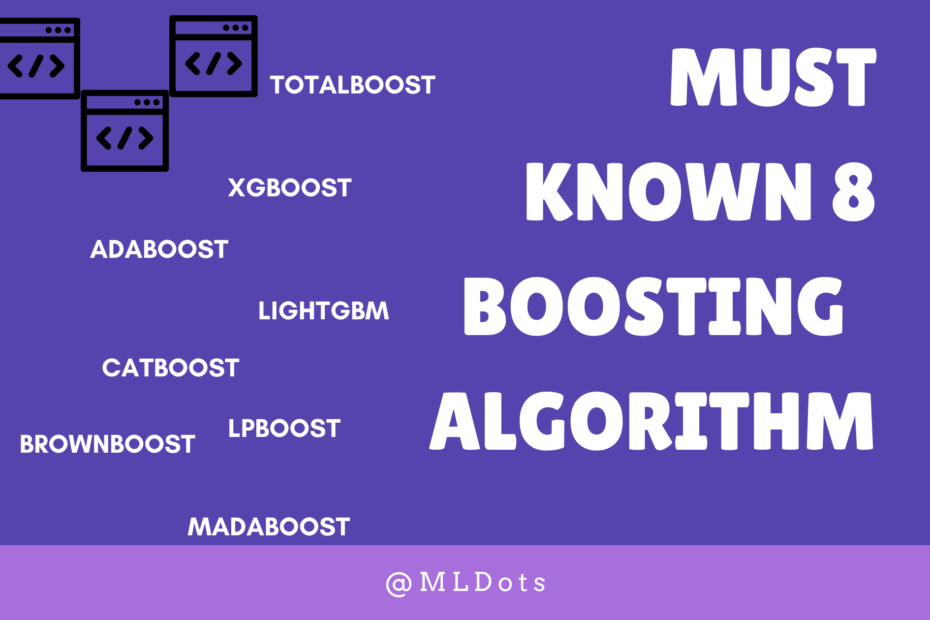

Now, let’s look at 8 boosting algorithms you should know:

- AdaBoost

- XGBoost

- LightGBM

- CatBoost

- LPBoost

- TotalBoost

- BrownBoost

- MadaBoost

1. AdaBoost: AdaBoost (Adaptive Boosting), introduced by Yoav Freund and Robert Schapire, is a machine learning algorithm that trains a series of weak learners, usually very shallow decision trees called decision stumps, and combines them into a single weighted vote. For example, boosted models of this kind can be used to sort search results or rank news stories. In its most common form, AdaBoost boosts a plain decision tree learner and improves on it.

The boosting algorithm works by iteratively identifying the misclassified instances and increasing their weights, so that the next weak learner concentrates on them. The algorithm is repeated for a fixed number of rounds, or until the training error stops improving.

AdaBoost is suited for classification problems where there are more instances than features, and each instance must be assigned to only one class.
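To make the reweighting idea concrete, here is a minimal sketch of AdaBoost with decision stumps in plain Python. It assumes binary labels encoded as +1 and -1; the function names (`train_stump`, `adaboost_fit`, `adaboost_predict`) and the tiny dataset are made up for illustration and are not taken from any library.

```python
import math

def train_stump(X, y, w):
    """Return (weighted_error, feature, threshold, sign) of the best stump."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            for sign in (1, -1):
                # The stump predicts `sign` when feature j <= thr, else `-sign`.
                preds = [sign if row[j] <= thr else -sign for row in X]
                err = sum(wi for wi, p, yi in zip(w, preds, y) if p != yi)
                if best is None or err < best[0]:
                    best = (err, j, thr, sign)
    return best

def stump_predict(stump, row):
    _, j, thr, sign = stump
    return sign if row[j] <= thr else -sign

def adaboost_fit(X, y, n_rounds=10):
    """Labels in y must be +1 or -1. Returns a list of (alpha, stump) pairs."""
    n = len(X)
    w = [1.0 / n] * n                      # start with uniform example weights
    ensemble = []
    for _ in range(n_rounds):
        stump = train_stump(X, y, w)
        err = min(max(stump[0], 1e-10), 1 - 1e-10)  # avoid log(0)
        if err >= 0.5:                     # no better than random guessing
            break
        alpha = 0.5 * math.log((1 - err) / err)     # vote of this stump
        ensemble.append((alpha, stump))
        # Misclassified examples (y * prediction = -1) get heavier.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, row))
             for wi, row, yi in zip(w, X, y)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def adaboost_predict(ensemble, row):
    score = sum(alpha * stump_predict(s, row) for alpha, s in ensemble)
    return 1 if score >= 0 else -1

# A pattern that no single threshold can separate: + + - - + +
X = [[1], [2], [3], [4], [5], [6]]
y = [1, 1, -1, -1, 1, 1]
model = adaboost_fit(X, y, n_rounds=3)
print([adaboost_predict(model, row) for row in X])
```

No single stump can classify this dataset correctly, but the weighted vote of a few stumps can, which is the whole point of boosting.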

AdaBoost has been applied in many real-world applications including:

- Face detection (the Viola-Jones detector)

- Search results ranking

- Recommender systems

- Data compression

- Speech recognition

2. XGBoost: XGBoost (eXtreme Gradient Boosting) is a library that implements gradient-boosted decision trees. It’s used for a wide variety of supervised learning applications, from regression and classification to ranking.

XGBoost is not limited to binary classification: it handles regression, binary and multiclass classification, and ranking. The training data must have a target column (the label) and additional feature columns. Features are expected to be numerical, so categorical features usually need to be encoded first; missing values are handled natively.

XGBoost learns to predict the labels by adding base learners (trees) one at a time, each one fitted to reduce the remaining error of the ensemble, with a regularization term that penalizes overly complex trees. The library provides an intuitive interface that allows users to quickly train their own machine-learning models without having to code the algorithm from scratch.
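XGBoost decides where to split a tree node by using a regularized gain computed from the sums of first and second derivatives (gradients and Hessians) of the loss on each side of the split. The sketch below only illustrates that formula in plain Python; it is not the library's code. The helper names are made up, and the parameters `reg_lambda` and `gamma` are simply named after the library's L2 and split-penalty settings.

```python
def leaf_weight(grad_sum, hess_sum, reg_lambda=1.0):
    """Optimal output value of a leaf: -G / (H + lambda)."""
    return -grad_sum / (hess_sum + reg_lambda)

def leaf_score(grad_sum, hess_sum, reg_lambda=1.0):
    """Quality of a leaf: G^2 / (H + lambda)."""
    return grad_sum ** 2 / (hess_sum + reg_lambda)

def split_gain(g_left, h_left, g_right, h_right, reg_lambda=1.0, gamma=0.0):
    """Gain of splitting a node into left/right children, minus the penalty gamma."""
    parent = leaf_score(g_left + g_right, h_left + h_right, reg_lambda)
    children = (leaf_score(g_left, h_left, reg_lambda)
                + leaf_score(g_right, h_right, reg_lambda))
    return 0.5 * (children - parent) - gamma

# Squared error example: targets [1, 1, 5, 5], current prediction 3 for every row.
# Gradient per row is (prediction - target), Hessian per row is 1.
grads = [2.0, 2.0, -2.0, -2.0]
g_left, h_left = sum(grads[:2]), 2.0
g_right, h_right = sum(grads[2:]), 2.0

print(split_gain(g_left, h_left, g_right, h_right))
print(leaf_weight(g_left, h_left), leaf_weight(g_right, h_right))
```

A split is only worth making when the gain is positive, so a larger `gamma` leads to smaller, more conservative trees.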

3. LightGBM: LightGBM is a gradient boosting framework developed by Microsoft. It’s used to train ensembles of decision trees, which are capable of making accurate predictions with large data sets.

LightGBM is designed to be fast. It buckets feature values into histograms and grows trees leaf-wise instead of level-wise, which usually cuts training time and memory use. Its accuracy is generally on par with other open source options, such as XGBoost or CatBoost; which one wins depends on the dataset.

LightGBM trains trees using gradient boosting, an advanced machine learning technique that uses iterative improvement and multiple passes over the data to increase its accuracy.

LightGBM stands out mainly because of its training speed on large datasets. On small datasets, its leaf-wise tree growth can overfit, so it tends to work best when there are many thousands to millions of samples.
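Much of LightGBM's speed comes from histogram-based split finding: instead of testing every distinct feature value as a threshold, values are grouped into a small number of bins and only the bin boundaries are tested. The following is a simplified sketch of that idea, not LightGBM's actual code. It assumes equal-width bins and a squared-error style score, and the function names are hypothetical.

```python
def build_histogram(values, grads, n_bins=4):
    """Accumulate gradient sums and counts per equal-width bin."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0      # fall back to 1.0 if all values are equal
    grad_sum = [0.0] * n_bins
    count = [0] * n_bins
    for v, g in zip(values, grads):
        b = min(int((v - lo) / width), n_bins - 1)
        grad_sum[b] += g
        count[b] += 1
    return grad_sum, count

def best_bin_split(grad_sum, count):
    """Scan bin boundaries and return (best_bin, score).

    Rows in bins 0..best_bin go left, the rest go right.
    Score is G_left^2 / n_left + G_right^2 / n_right.
    """
    total_g, total_n = sum(grad_sum), sum(count)
    best_score, best_bin = float("-inf"), None
    g_left, n_left = 0.0, 0
    for b in range(len(grad_sum) - 1):
        g_left += grad_sum[b]
        n_left += count[b]
        n_right = total_n - n_left
        if n_left == 0 or n_right == 0:
            continue
        g_right = total_g - g_left
        score = g_left ** 2 / n_left + g_right ** 2 / n_right
        if score > best_score:
            best_score, best_bin = score, b
    return best_bin, best_score

values = [0, 1, 2, 3, 4, 5, 6, 7]
grads = [-1, -1, -1, -1, 1, 1, 1, 1]
hist = build_histogram(values, grads, n_bins=4)
print(best_bin_split(*hist))
```

With 8 rows the saving is invisible, but with millions of rows and a few hundred bins, scanning bins instead of rows is what keeps each split cheap.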

4. CatBoost: CatBoost is a gradient boosting library developed by Yandex, designed in particular for data with categorical features (the name is short for “Categorical Boosting”). It handles categorical columns natively, tends to work well with its default parameters, and supports custom loss functions, so users can have more control over the final model and get good results with less tuning.

We recommend this algorithm for problems with many categorical features, for problems with multiple classes (multiclass classification), and for problems that need to make fast predictions.
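One idea behind CatBoost's handling of categorical features is the ordered target statistic: each row's category is replaced by the average target of the earlier rows with the same category, smoothed by a prior, so a row never sees its own label. Here is a minimal sketch of that idea. It treats the given row order as the "time" order (CatBoost itself uses random permutations), and the function name and the `prior` and `prior_weight` parameters are assumptions made for this example.

```python
def ordered_target_encoding(categories, targets, prior=0.5, prior_weight=1.0):
    """Encode each category using only the targets of the rows that came before it."""
    sums, counts = {}, {}
    encoded = []
    for cat, y in zip(categories, targets):
        s = sums.get(cat, 0.0)
        c = counts.get(cat, 0)
        # Smoothed mean of earlier targets; equals the prior for a first occurrence.
        encoded.append((s + prior_weight * prior) / (c + prior_weight))
        # Only now add this row's own target to the running statistics.
        sums[cat] = s + y
        counts[cat] = c + 1
    return encoded

cats = ["a", "b", "a", "a", "b"]
ys = [1, 0, 1, 0, 1]
print(ordered_target_encoding(cats, ys))
```

Because the encoding of a row never includes its own target, this avoids the target leakage that a plain "mean target per category" encoding would introduce.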

5. LPBoost: LPBoost (Linear Programming Boosting) is a boosting algorithm that chooses the weights of the weak learners by solving a linear program that maximizes the soft margin between the classes. Unlike AdaBoost, it is totally corrective: at every iteration it re-optimizes the weights of all the weak learners chosen so far.

This algorithm is simple in concept, but complex in design, because every round requires solving a linear program. It is not tied to one application; it can be used with any kind of weak classifier as an element of a machine learning project.
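For readers who like to see the math, LPBoost is usually described as a soft-margin linear program over the weak learner weights. The formulation below is a sketch with notation chosen for this article: $h_j$ are the weak classifiers, $\alpha_j$ their weights, $\rho$ the margin, $\xi_i$ the slack of example $i$, and $D$ a cost parameter that trades margin against slack.

```latex
\begin{aligned}
\max_{\alpha,\ \xi,\ \rho} \quad & \rho - D \sum_{i=1}^{n} \xi_i \\
\text{subject to} \quad & y_i \sum_{j=1}^{m} \alpha_j h_j(x_i) \ge \rho - \xi_i, \qquad i = 1, \dots, n \\
& \sum_{j=1}^{m} \alpha_j = 1, \qquad \alpha_j \ge 0, \qquad \xi_i \ge 0
\end{aligned}
```

Every term is linear in the unknowns, which is why an ordinary LP solver can be used at each round.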

6. TotalBoost: TotalBoost is a totally corrective boosting algorithm that aims to maximize the margin, and it can be used in many different classification situations.

TotalBoost works by keeping track of how every weak learner chosen so far performs on the weighted training examples, not just the most recent one.

The way it works is by updating the example weights at each round so that all the previous weak learners have only a small edge (advantage over random guessing) on the new weights, while keeping those weights as close as possible to the initial uniform distribution in terms of relative entropy.

This is helpful for any situation where you want a compact ensemble. Because each update corrects for all the earlier weak learners at once, TotalBoost tends to need fewer rounds than AdaBoost, at the cost of more computation per round.

7. BrownBoost: BrownBoost is a boosting algorithm introduced by Yoav Freund as an adaptive version of the boost-by-majority algorithm, used in situations where the training data is noisy.

It can be used in scenarios where some of the labels are simply wrong. AdaBoost keeps increasing the weight of the examples it misclassifies, so mislabeled examples can end up dominating training. BrownBoost instead runs for a fixed amount of “time” and gradually gives up on examples that are repeatedly misclassified, treating them as probable noise.

The trade-off is that BrownBoost is less widely used than AdaBoost or gradient boosting, and its time parameter has to be chosen with the expected amount of noise in mind.

8. MadaBoost: MadaBoost is a modification of AdaBoost, proposed by Carlos Domingo and Osamu Watanabe, that puts an upper bound on the weight any single training example can receive. It is generally used in cases where AdaBoost would put too much weight on a few hard or noisy examples.

The algorithm takes a set of weak learners (frequently simple decision trees) as input. Each weak learner produces a prediction that is then combined with the other predictions using a weighted sum.

This process is repeated for a fixed number of iterations, and the predictions from all weak learners are aggregated into a single strong predictor. Because the example weights are capped, hard or mislabeled examples cannot dominate training, which makes MadaBoost more robust to noise than AdaBoost.
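The difference between AdaBoost and MadaBoost comes down to how example weights grow. A rough sketch, assuming uniform initial weights and using the margin of an example (label times the current ensemble score) as input, could look like this. The function names are hypothetical and the cap of 1.0 stands in for the example's initial weight.

```python
import math

def adaboost_weights(margins):
    """AdaBoost-style weights: exp(-margin), normalized to sum to 1."""
    raw = [math.exp(-m) for m in margins]
    total = sum(raw)
    return [r / total for r in raw]

def madaboost_weights(margins):
    """MadaBoost-style weights: same as above, but each raw weight is capped at 1."""
    raw = [min(1.0, math.exp(-m)) for m in margins]
    total = sum(raw)
    return [r / total for r in raw]

# Three examples classified correctly and one badly misclassified (margin -4).
margins = [2.0, 1.0, 0.5, -4.0]
print(adaboost_weights(margins))
print(madaboost_weights(margins))
```

With AdaBoost-style weights the badly misclassified example takes almost all of the weight, while with the cap it stays below half, so one outlier cannot hijack the next round.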

Boosting is used when you want to create a model by letting each new model improve upon the previous ones.

Say you have some records with true output values y and a first model that predicts p(x). The errors it makes are the residuals y - p(x). You may add another model c(x) that is trained to predict those residuals, so that the new prediction is y’ = p(x) + c(x). The sum of both predictions should be better than the first prediction alone.

This is how boosting works. And it is mostly used for classification and regression problems. The basis for a boosting algorithm is a weighted sum of models that adds to the previous/existing models incrementally; the term “boosting” refers to boosting weak learners into a strong one.
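The “add a model that corrects the previous one” idea can be sketched in a few lines of plain Python. This is a toy gradient boosting loop for squared error on a single feature, using one-split regression stumps as weak learners. The function names, the learning rate of 0.5, and the data are all made up for illustration.

```python
def fit_stump(xs, residuals):
    """Best single-threshold stump (threshold, left_mean, right_mean) by squared error."""
    best = None
    for thr in sorted(set(xs))[:-1]:       # the largest value would leave the right side empty
        left = [r for x, r in zip(xs, residuals) if x <= thr]
        right = [r for x, r in zip(xs, residuals) if x > thr]
        lmean, rmean = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((r - lmean) ** 2 for r in left)
               + sum((r - rmean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, thr, lmean, rmean)
    return best[1:]

def gradient_boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Start from the mean, then repeatedly fit a stump to the current residuals."""
    base = sum(ys) / len(ys)
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        thr, lmean, rmean = fit_stump(xs, residuals)
        stumps.append((thr, lmean, rmean))
        preds = [p + learning_rate * (lmean if x <= thr else rmean)
                 for x, p in zip(xs, preds)]
    return base, learning_rate, stumps

def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(l if x <= t else r for t, l, r in stumps)

def mse(model, xs, ys):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(xs)

xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 3.1, 2.9, 5.2, 4.8]
baseline = gradient_boost(xs, ys, n_rounds=0)   # just the mean
boosted = gradient_boost(xs, ys, n_rounds=20)
print(mse(baseline, xs, ys), mse(boosted, xs, ys))
```

Each round only has to explain what the previous rounds left unexplained, which is why the training error keeps shrinking as stumps are added.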

For years we have used a variety of techniques to predict class labels from data, such as Naive Bayes and decision trees. A single decision tree is expressive and easy to interpret, but it tends to overfit when grown deep and to underfit when kept shallow.

Since the 1990s, a powerful family of classifiers called boosting algorithms has become popular because it can automatically combine many weak classifiers, such as shallow trees, into a strong one.

Boosting trains multiple learners sequentially, e.g., an AdaBoost algorithm trains several decision trees in succession given weighted samples from the training set.

Its high accuracy on many real-life problems has made it the object of intensive research efforts ever since Yoav Freund and Robert Schapire introduced AdaBoost in the mid-1990s. Hope you enjoyed this article. At MlDots, we will cover each algorithm in detail, with its implementation, in future posts.